
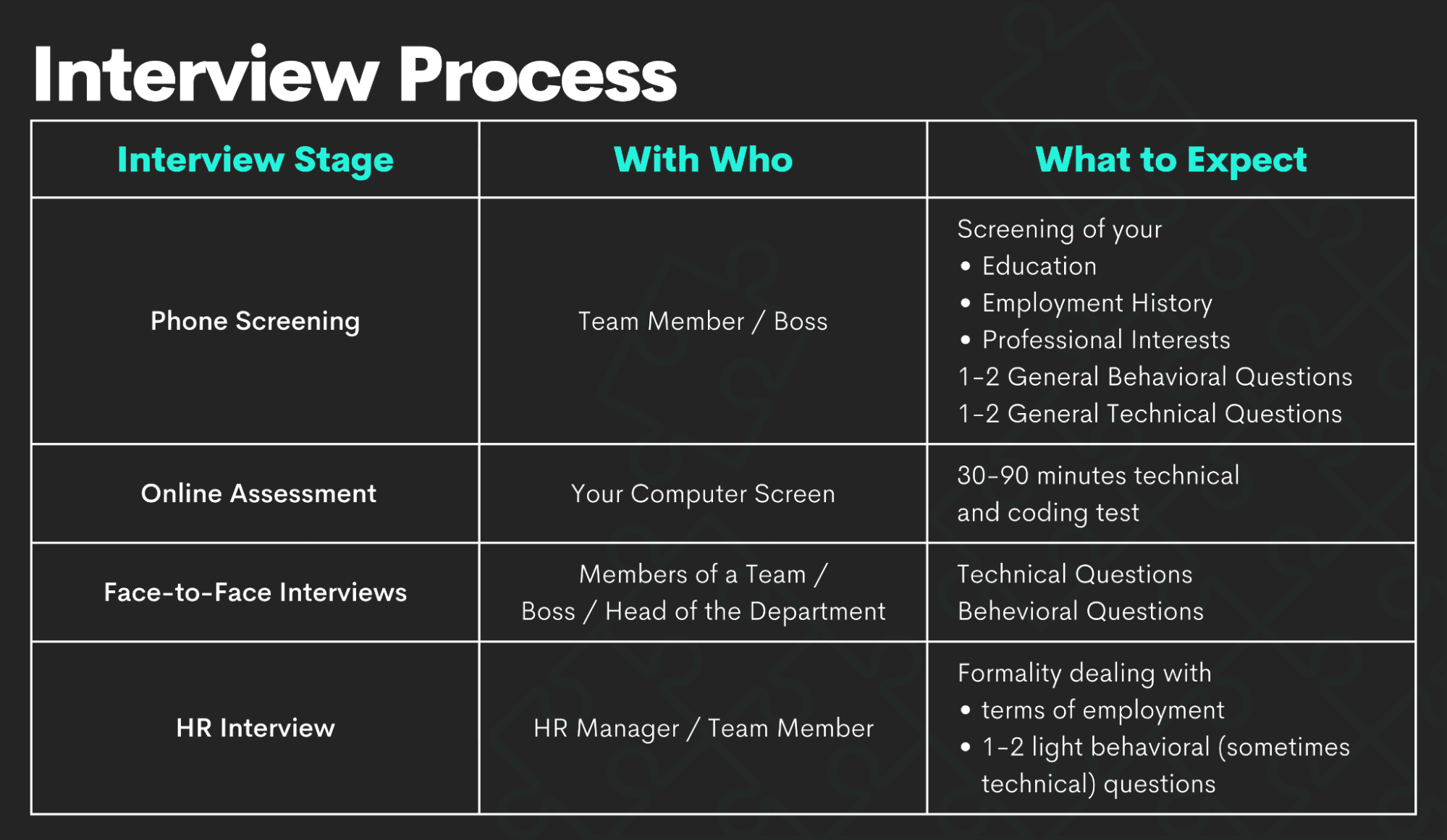

Amazon now commonly asks interviewees to code in an online document file. Now that you know what questions to expect, let's focus on how to prepare.

Below is our four-step preparation plan for Amazon data scientist candidates. If you're preparing for more companies than just Amazon, then check our general data science interview preparation guide. Before investing tens of hours preparing for an interview at Amazon, you should take some time to make sure it's actually the right company for you. Most candidates fail to do this.

, which, although it's designed around software development, should give you an idea of what they're looking for.

Note that in the onsite rounds you'll likely have to code on a whiteboard without being able to execute it, so practice working through problems on paper. For machine learning and statistics questions, provides online courses designed around statistical probability and other useful topics, some of which are free. Kaggle also offers free courses on introductory and intermediate machine learning, as well as data cleaning, data visualization, SQL, and others.


Make sure you have at least one story or example for each of the principles, drawn from a wide range of positions and projects. Finally, a great way to practice all of these different types of questions is to interview yourself out loud. This may sound strange, but it will significantly improve the way you communicate your answers during an interview.


Trust us, it works. Practicing by yourself will only take you so far. One of the main challenges of data scientist interviews at Amazon is communicating your answers in a way that's easy to understand. As a result, we strongly recommend practicing with a peer interviewing you. If possible, a great place to start is to practice with friends.


Be warned, as you may come up against the following problems: it's hard to know if the feedback you get is accurate; your peers are unlikely to have insider knowledge of interviews at your target company; and on peer platforms, people often waste your time by not showing up. For these reasons, many candidates skip peer mock interviews and go straight to mock interviews with an expert.

Google Data Science Interview Insights

That's an ROI of 100x!

Typically, data science focuses on mathematics, computer science and domain expertise. While I will briefly cover some computer science fundamentals, the bulk of this blog will mainly cover the mathematical basics you may need to brush up on (or even take an entire course on).

While I understand most of you reading this are more math heavy by nature, realize that the bulk of data science (dare I say 80%+) is collecting, cleaning and processing data into a useful form. For this work, Python and R are the most popular languages in the data science space. However, I have also come across C/C++, Java and Scala.

Mock Data Science Interview

It is common to see most data scientists fall into one of two camps: Mathematicians and Database Architects. If you are the second one, this blog won't help you much (YOU ARE ALREADY AWESOME!).

This might be collecting sensor data, scraping websites or conducting surveys. After collecting the data, it needs to be transformed into a usable form (e.g. a key-value store in JSON Lines files). Once the data is collected and put in a usable format, it is essential to perform some data quality checks.
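To make the collect, transform and check loop concrete, here is a minimal Python sketch, assuming records arrive as Python dicts. It serializes them to JSON Lines (one JSON object per line) and runs three basic quality checks: duplicate rows, missing fields, and a domain rule. The field names, sample records, and the rule that usage can't be negative are all hypothetical, chosen only for illustration.

```python
import json

# Hypothetical raw records, e.g. rows scraped from a website or survey answers.
raw_records = [
    {"user_id": 1, "country": "US", "usage_mb": 1200.0},
    {"user_id": 2, "country": "DE", "usage_mb": None},
    {"user_id": 2, "country": "DE", "usage_mb": None},
    {"user_id": 3, "country": "", "usage_mb": -5.0},
]

def to_json_lines(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(r, sort_keys=True) for r in records)

def quality_report(lines):
    """Run simple data quality checks over a JSON Lines string."""
    rows = [json.loads(line) for line in lines.splitlines() if line.strip()]
    seen = set()
    report = {"rows": len(rows), "duplicates": 0, "missing": 0, "invalid": 0}
    for row in rows:
        # Exact duplicates: same keys and same values.
        key = json.dumps(row, sort_keys=True)
        if key in seen:
            report["duplicates"] += 1
        seen.add(key)
        # Rows with at least one null or empty-string field.
        if any(v is None or v == "" for v in row.values()):
            report["missing"] += 1
        # Assumed domain rule: usage cannot be negative.
        usage = row.get("usage_mb")
        if usage is not None and usage < 0:
            report["invalid"] += 1
    return report

print(quality_report(to_json_lines(raw_records)))
```

In a real pipeline each check would usually log the offending rows rather than just count them, so you can decide whether to drop, fix or investigate them.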

Key Data Science Interview Questions for FAANG

In cases of fraud, it is very common to have heavy class imbalance (e.g. only 2% of the dataset is actual fraud). Such information is important for choosing the appropriate approaches to feature engineering, modelling and model evaluation. For more information, check my blog on Fraud Detection Under Extreme Class Imbalance.
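Checking the class balance is a cheap first step. Here is a minimal Python sketch using made-up labels with the 2% fraud rate from the example above: it reports each class's share and computes inverse-frequency class weights, the same n_samples / (n_classes * count) formula scikit-learn uses for `class_weight="balanced"`. The helper names are hypothetical.

```python
from collections import Counter

def class_distribution(labels):
    """Return each class's share of the dataset."""
    counts = Counter(labels)
    total = len(labels)
    return {label: count / total for label, count in counts.items()}

def balanced_class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {label: n / (k * c) for label, c in counts.items()}

# Hypothetical labels: 1 = fraud, 0 = legitimate, with 2% fraud.
labels = [1] * 2 + [0] * 98
shares = class_distribution(labels)
print(shares)  # {1: 0.02, 0: 0.98}

# Always predicting "legitimate" already reaches 98% accuracy,
# so accuracy alone says little about fraud detection quality.
majority_accuracy = max(shares.values())
print(balanced_class_weights(labels))  # fraud weighted 25.0, legitimate ~0.51
```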

In bivariate analysis, each feature is compared to other features in the dataset. Scatter matrices allow us to find hidden patterns such as:
- features that should be engineered together
- features that may need to be removed to avoid multicollinearity

Multicollinearity is actually a problem for many models like linear regression and hence needs to be taken care of accordingly.
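One quick way to spot candidates for removal is to scan the correlation matrix that a scatter matrix visualizes. The sketch below, assuming NumPy is available, flags feature pairs whose absolute Pearson correlation exceeds a threshold; the 0.9 cutoff, the `correlated_pairs` name and the synthetic height/age data are illustrative assumptions, not a standard.

```python
import numpy as np

def correlated_pairs(X, names, threshold=0.9):
    """Return feature pairs whose absolute Pearson correlation exceeds threshold."""
    corr = np.corrcoef(X, rowvar=False)  # columns are features
    pairs = []
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            if abs(corr[i, j]) > threshold:
                pairs.append((names[i], names[j], round(float(corr[i, j]), 3)))
    return pairs

rng = np.random.default_rng(0)
height_cm = rng.normal(170, 10, size=200)
height_in = height_cm / 2.54 + rng.normal(0, 0.1, size=200)  # near-duplicate feature
age = rng.normal(40, 12, size=200)                           # independent feature
X = np.column_stack([height_cm, height_in, age])
print(correlated_pairs(X, ["height_cm", "height_in", "age"]))
```

Pairwise correlation only catches collinearity between two features; variance inflation factors are the usual check when several features jointly explain another.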

In this section, we will explore some common feature engineering tactics. At times, a feature on its own may not provide useful information. Imagine using internet usage data. You will have YouTube users consuming as much as gigabytes while Facebook Messenger users use a couple of megabytes.
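The text doesn't name a fix here, so take this as one common option rather than the author's: a log transform compresses a feature that spans several orders of magnitude. A minimal Python sketch with hypothetical usage numbers, using `math.log1p` so that zero usage stays defined:

```python
import math

# Hypothetical monthly usage in megabytes: Messenger users use a few MB,
# YouTube users can reach several GB (1 GB = 1024 MB here).
usage_mb = {"messenger_user": 3.0, "casual_user": 250.0, "youtube_user": 8 * 1024.0}

# log1p(x) = log(1 + x) compresses the range and stays defined at x = 0.
log_usage = {user: math.log1p(mb) for user, mb in usage_mb.items()}
for user, value in log_usage.items():
    print(f"{user}: {usage_mb[user]:>8.1f} MB -> {value:.2f}")
```

The raw values differ by a factor of more than 2,000, while the transformed ones differ by less than 10, which keeps the heaviest users from dominating distance- or gradient-based models.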

Another issue is the use of categorical values. While categorical values are common in the data science world, realize that computers can only understand numbers.
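One-hot encoding is a standard way to turn categories into numbers: each category becomes its own 0/1 column. Here is a minimal pure-Python sketch with a hypothetical `one_hot` helper (in practice, `pandas.get_dummies` or scikit-learn's `OneHotEncoder` do this for you):

```python
def one_hot(values):
    """Map each categorical value to a 0/1 vector, one column per category."""
    categories = sorted(set(values))
    index = {cat: i for i, cat in enumerate(categories)}
    rows = []
    for v in values:
        row = [0] * len(categories)
        row[index[v]] = 1  # exactly one "hot" position per row
        rows.append(row)
    return categories, rows

cats, encoded = one_hot(["red", "green", "blue", "green"])
print(cats)     # ['blue', 'green', 'red']
print(encoded)  # [[0, 0, 1], [0, 1, 0], [1, 0, 0], [0, 1, 0]]
```

Note that one-hot encoding a feature with many categories produces many mostly-zero columns.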

Data Cleaning Techniques For Data Science Interviews

At times, having too many sparse dimensions will hamper the performance of the model. An algorithm commonly used for dimensionality reduction is Principal Component Analysis, or PCA.
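PCA can be computed from the singular value decomposition of the mean-centered data matrix. The sketch below assumes NumPy and uses synthetic data built from two hidden factors spread over ten features, so two components should explain almost all of the variance; the `pca` function name and the data are illustrative.

```python
import numpy as np

def pca(X, n_components):
    """Project X (rows = samples) onto its top principal components via SVD."""
    X_centered = X - X.mean(axis=0)  # PCA assumes mean-centered data
    # Rows of Vt are the principal directions, sorted by singular value.
    _, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]
    explained_variance = (S ** 2) / (X.shape[0] - 1)
    ratio = explained_variance[:n_components] / explained_variance.sum()
    return X_centered @ components.T, ratio

rng = np.random.default_rng(42)
latent = rng.normal(size=(500, 2))   # 2 underlying factors
mixing = rng.normal(size=(2, 10))    # spread across 10 observed features
X = latent @ mixing + 0.01 * rng.normal(size=(500, 10))
Z, ratio = pca(X, n_components=2)
print(Z.shape, ratio.sum())  # (500, 2) and a ratio close to 1.0
```

Because PCA is driven by variance, features are usually standardized first so that large-unit features don't dominate the components.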

The common categories of feature selection methods and their subcategories are explained in this section. Filter methods are generally used as a preprocessing step. The selection of features is independent of any machine learning algorithm. Instead, features are selected on the basis of their scores in various statistical tests for their correlation with the outcome variable.
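A filter method in its simplest form: score every feature with a statistic against the target and keep the top k, without training any model. This sketch assumes NumPy, uses absolute Pearson correlation as the score, and generates synthetic data where only features 0 and 3 drive the target; `filter_select` and `k` are hypothetical names.

```python
import numpy as np

def filter_select(X, y, k):
    """Rank features by |Pearson r| with the target and keep the top k.

    No model is trained: the score comes from a statistical test alone.
    """
    scores = np.array([abs(np.corrcoef(X[:, j], y)[0, 1]) for j in range(X.shape[1])])
    top = np.argsort(scores)[::-1][:k]  # indices of the k highest scores
    return sorted(top.tolist()), scores

rng = np.random.default_rng(1)
X = rng.normal(size=(300, 5))
y = 3 * X[:, 0] - 2 * X[:, 3] + rng.normal(scale=0.5, size=300)  # only features 0 and 3 matter
selected, scores = filter_select(X, y, k=2)
print(selected)
```

Pearson correlation only detects linear relationships; for categorical targets, tests such as ANOVA or Chi-Square play the same role.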

Common filter methods include Pearson's Correlation, Linear Discriminant Analysis, ANOVA and Chi-Square. In wrapper methods, we use a subset of features and train a model on them. Based on the inferences we draw from the previous model, we decide to add or remove features from the subset.
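Forward selection, a classic wrapper method, can be sketched as a greedy loop: try adding each remaining feature, train a model on the enlarged subset, and keep the feature that most improves held-out error. Assumptions in this NumPy sketch: ordinary least squares as the model, validation mean squared error as the criterion, stopping as soon as no feature helps, and synthetic data where features 0, 2 and 4 matter. The function names are hypothetical.

```python
import numpy as np

def fit_mse(X_train, y_train, X_val, y_val):
    """Fit ordinary least squares (with intercept) and return validation MSE."""
    A = np.column_stack([np.ones(len(X_train)), X_train])
    coef, *_ = np.linalg.lstsq(A, y_train, rcond=None)
    B = np.column_stack([np.ones(len(X_val)), X_val])
    return float(np.mean((B @ coef - y_val) ** 2))

def forward_selection(X_train, y_train, X_val, y_val):
    """Greedily add the feature that most reduces validation error; stop when none helps."""
    selected, remaining = [], list(range(X_train.shape[1]))
    best_err = float(np.mean((y_val - y_train.mean()) ** 2))  # intercept-only model
    while remaining:
        errs = {j: fit_mse(X_train[:, selected + [j]], y_train,
                           X_val[:, selected + [j]], y_val) for j in remaining}
        j_best = min(errs, key=errs.get)
        if errs[j_best] >= best_err:
            break  # no remaining feature improves the model
        best_err = errs[j_best]
        selected.append(j_best)
        remaining.remove(j_best)
    return selected, best_err

rng = np.random.default_rng(3)
X = rng.normal(size=(400, 6))
y = 2 * X[:, 0] + X[:, 2] - 1.5 * X[:, 4] + rng.normal(scale=0.5, size=400)
selected, err = forward_selection(X[:300], y[:300], X[300:], y[300:])
print(selected, round(err, 3))
```

Backward elimination is the mirror image (start with all features and drop the least useful one each round), and each round retrains the model, which is why wrapper methods are far more expensive than filter methods.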


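Common wrapper methods are Forward Selection, Backward Elimination and Recursive Feature Elimination. Embedded methods build the selection into model training itself; LASSO and RIDGE are common ones. The regularized objectives are given below for reference:

Lasso:

$$\hat{\beta}^{\text{lasso}} = \arg\min_{\beta} \sum_{i=1}^{n} \left(y_i - x_i^\top \beta\right)^2 + \lambda \sum_{j=1}^{p} \lvert \beta_j \rvert$$

Ridge:

$$\hat{\beta}^{\text{ridge}} = \arg\min_{\beta} \sum_{i=1}^{n} \left(y_i - x_i^\top \beta\right)^2 + \lambda \sum_{j=1}^{p} \beta_j^2$$

That being said, it is important to understand the mechanics behind LASSO and RIDGE for interviews.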
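To see the difference between LASSO and RIDGE mechanically, the sketch below fits both on synthetic data where only the first two of five features matter. It assumes NumPy and the unscaled objectives written in the docstrings; libraries scale these differently (scikit-learn's `Lasso`, for example, divides the squared error by 2n), so the λ values here don't transfer directly. Ridge has a closed form; LASSO is solved by coordinate descent with soft-thresholding. Function names and data are illustrative.

```python
import numpy as np

def ridge(X, y, lam):
    """Closed-form Ridge: minimize ||y - X b||^2 + lam * ||b||^2."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)

def soft_threshold(rho, t):
    """Shrink rho toward zero by t, snapping to exactly zero inside [-t, t]."""
    return np.sign(rho) * max(abs(rho) - t, 0.0)

def lasso(X, y, lam, n_iter=200):
    """Coordinate descent for ||y - X b||^2 + lam * ||b||_1."""
    n, p = X.shape
    b = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0)
    for _ in range(n_iter):
        for j in range(p):
            # Partial residual with feature j's contribution added back.
            r_j = y - X @ b + X[:, j] * b[j]
            rho = X[:, j] @ r_j
            b[j] = soft_threshold(rho, lam / 2) / col_sq[j]
    return b

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
X = (X - X.mean(axis=0)) / X.std(axis=0)  # standardize: penalties assume comparable scales
y = 4 * X[:, 0] - 3 * X[:, 1] + rng.normal(scale=0.5, size=200)
y = y - y.mean()                          # center y so no intercept is needed

b_ridge = ridge(X, y, lam=50.0)
b_lasso = lasso(X, y, lam=100.0)
print(np.round(b_ridge, 3))
print(np.round(b_lasso, 3))
```

With these settings LASSO sets the three irrelevant coefficients exactly to zero, while Ridge only shrinks every coefficient toward zero. That is why LASSO doubles as a feature selector.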

Unsupervised Learning is when the labels are unavailable. That being said,!!! This error is enough for the interviewer to cancel the interview. Another noob mistake people make is not normalizing the features before running the model.
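Normalization here is shown as standardization, one common choice: rescale each feature to zero mean and unit variance. A minimal NumPy sketch with hypothetical features on very different scales; the key detail is fitting the mean and standard deviation on the training data only and reusing them on test data. The helper names are hypothetical.

```python
import numpy as np

def fit_standardizer(X_train):
    """Learn per-feature mean and std from the training data only."""
    mean = X_train.mean(axis=0)
    std = X_train.std(axis=0)
    std[std == 0] = 1.0  # avoid division by zero for constant features
    return mean, std

def transform(X, mean, std):
    return (X - mean) / std

# Hypothetical features on very different scales: usage in MB and number of sessions.
X_train = np.array([[1200.0, 3.0], [8000.0, 10.0], [50.0, 1.0], [300.0, 4.0]])
X_test = np.array([[500.0, 2.0]])
mean, std = fit_standardizer(X_train)
Z_train = transform(X_train, mean, std)
Z_test = transform(X_test, mean, std)  # reuse training statistics: no test-set leakage
print(Z_train.mean(axis=0).round(6), Z_train.std(axis=0).round(6))  # means ~0, stds ~1
```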

Linear and Logistic Regression are the most fundamental and commonly used Machine Learning algorithms out there. One common interview slip people make is starting their analysis with a more complex model like a Neural Network. Before doing any analysis, set up benchmarks: they are crucial.
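A benchmark-first workflow can be sketched in a few lines: compare a majority-class baseline against logistic regression before reaching for anything more complex. This NumPy sketch assumes synthetic binary data and a plain gradient-descent logistic regression (the learning rate and iteration count are arbitrary choices, and the helper names are hypothetical); in practice you'd likely use a library implementation.

```python
import numpy as np

def train_logistic(X, y, lr=0.1, n_iter=2000):
    """Batch gradient descent on the mean log-loss, with an intercept column."""
    A = np.column_stack([np.ones(len(X)), X])
    w = np.zeros(A.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-A @ w))    # predicted probabilities
        w -= lr * A.T @ (p - y) / len(y)    # gradient of the mean log-loss
    return w

def predict(w, X):
    A = np.column_stack([np.ones(len(X)), X])
    return (A @ w > 0).astype(int)

rng = np.random.default_rng(7)
X = rng.normal(size=(1000, 3))
y = (X[:, 0] + 0.5 * X[:, 1] + rng.normal(scale=0.5, size=1000) > 0).astype(int)
X_train, X_test, y_train, y_test = X[:800], X[800:], y[:800], y[800:]

# Benchmark 1: always predict the majority class seen in training.
majority = int(y_train.mean() >= 0.5)
baseline_acc = float(np.mean(y_test == majority))
# Benchmark 2: logistic regression. A complex model has to beat both of these.
w = train_logistic(X_train, y_train)
logreg_acc = float(np.mean(predict(w, X_test) == y_test))
print(f"majority baseline: {baseline_acc:.2f}, logistic regression: {logreg_acc:.2f}")
```

If a Neural Network can't clearly beat the logistic regression number, the extra complexity is hard to justify in an interview.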
